Back in the eighteenth century, there was a good deal of interest in creating automata, and, as today, that interest signaled a shifting understanding of the human. Two major tech blogs have recently featured a couple of such projects coming out of Japan. I know between little and nothing about the technical strengths and weaknesses of these robots, or the purposes they are designed to serve. But as an outside observer, I found the contrast between them, and the reactions to them, instructive.

Take the first project, HRP-4C. It was gutsy of its creators to surround it with real-if-not-very-good dancers — but it was right on the line between gutsy and foolhardy, and I thought it stayed mostly on the foolhardy side. The Gizmodo blogger, on the other hand, found it “pretty amazing.” I’m not sure what they are seeing: its movements seem wooden and jerky, not that far advanced over the Disney audio-animatronics that I recall from my youth. And its voice? If we start from the fact that most pop music these days seems designed to make the singer sound synthetic in one way or another, it sounds great. But in any case, “amazing” suggests a pretty low bar.

Actroid-F is a different kettle of fish. Its abilities are more limited than HRP-4C’s, to be sure. But there are a few moments in the video where, if you had isolated them and told me it was an actress pretending to be a robot and not doing that well, I think I would have believed you. That Engadget headlined its post “Actroid-F: the angel of death robot coming to a hospital near you” makes me think that maybe there is something to the “uncanny valley” after all. (Full disclosure: I’m still rooting for some robotic version of Emily.)

It is only to be expected that in the not-so-distant future, these efforts will look as quaint as do the automata of the eighteenth century. But why, exactly? I can only imagine that our transhumanist friends must be somewhat conflicted about these humanoid robots. On one hand, they represent useful progress in areas that will help open doors to human redesign. But on the other hand, how shortsighted it must seem to spend so much effort on replicating those poorly designed meat machines we want to get rid of!

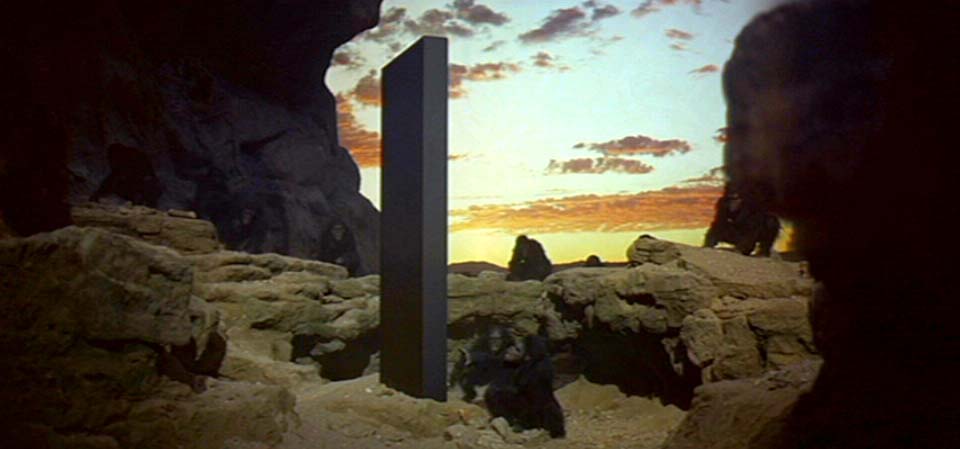

Or perhaps, on that ever-so-desirable third hand, these robots represent transitional forms in a process of making us comfortable working with our evolutionary successors. If transhumanists think we can climb out of the uncanny valley and create robots that only an expert can recognize as such (as in Blade Runner), they might consider those robots to be something like our monolith-transformed hominid ancestors in the opening sequence of Kubrick’s 2001: A Space Odyssey. Like those apes who haven’t seen the monolith, the humans living among these familiar-looking beings may not appreciate the extent to which they are witnessing the dawn of some very new age, and as a result might soon get their heads bashed in. But I forgot: we will develop friendly AI.

Futurisms

November 2, 2010

Is developing Friendly AI not a good idea?

I think that it is probably better to think about friendly or moral AI than not to think about it, and probably better to try to implement it than to fail to do so. “Probably” because if the thinking and the implementation are not serious, we will have fooled ourselves and missed an important opportunity. I’ve been looking more and more into this matter, and I can’t yet pretend to have fully formed thoughts. But I am reasonably confident about this much: Serious moral reflection or serious reflection on the meaning of friendship will not start from the assumption that “there is no Right Answer. It’s all in our heads, all made up” and then move on to condescending parody of the idea that there might be an objective right and wrong. To put it another way, if there is no objective right and wrong, we had better make sure that this truth is not part of the “utility function” of friendly AI and hope that an insight into moral reality (or lack of same) that is so obvious to Mr. Anissimov will be (oddly) beyond the reach of superintelligence. Because otherwise, this truth itself, perhaps combined with mere observation of our moral discourse and behaviors, would constitute just the kind of “base motivation” that might make friendly AI think twice about just what one “friend” owes to another. Heck, even mere humans have come up with the idea that it would be a kindness to put the human race out of its misery.