Making Technological Miracles


The case for curiosity-driven science — and a new way to think about R&D

World War II shapes how we think about science today more than does any other historical event of the last century, except perhaps for the Moon landing. Hiroshima and Nagasaki in particular stand as images — both awe-inspiring and horrific — of the raw power of scientific discovery. They have come to illustrate the profound stakes of the partnership between science and technology, and the partnership between science and government. With our growing scientific knowledge of nature, what technologies should we focus on developing? And what is the role of government in deciding this question — in regulating scientific research and directing it toward particular ends? There are no clearer examples than the Manhattan Project, and its direct descendant, the Apollo Program, of large-scale organized research, and of the government’s effectiveness in funding it and even steering it toward technological application — a collection of practices that historians have come to call “Big Science.”

Today, policymakers commonly call for “Manhattan projects” or “moonshots” to conquer major societal and technological challenges, from cancer to climate change. It has become part of the legacy of Big Science that the public image of scientists, as historian Clarence G. Lasby put it, “has generally been that of ‘miracle workers,’” a “prestigious image [that] has been translated into heightened political power and representation at the highest levels in government.” A number of iconic technologies were invented during World War II — including not just the atomic bomb but radar and the computer — in part owing to research sponsored and initiated by the government. Thus, many now see the iconic inventions of the war as offering this lesson: To accomplish great technological feats, we need government not only to fund research but also to direct research programs toward practical goals. Furthermore, so the argument goes, we must not waste funds on undirected, curiosity-driven science; its results are too often unpredictable, unusable, and even unreliable.

As we shall see, this is flawed historical reasoning. The bomb, radar, and the computer — to focus on only three of many examples — were made possible by a web of theoretical and technical developments that not only predated the war by years or decades but that also originated for the most part outside the scope of goal-directed research, whether government-sponsored or otherwise. The scientific insights that enabled the technological breakthroughs associated with World War II emerged not from practical goals but from curiosity-driven inquiry, in which serendipity sometimes played a decisive role.

The proposition that undirected research can generate enormous practical benefits was both preached and practiced by Vannevar Bush, a central character in the story of the invention of Big Science. Bush was the science advisor to President Franklin D. Roosevelt and director of the Office of Scientific Research and Development, a federal agency formed in 1941 to mobilize science during the war. The agency was pivotal to the American war effort, supporting the most successful of the government’s research enterprises — including the development of computers to aid in weapons guidance and cryptography, radar at M.I.T.’s Radiation Laboratory, and the Manhattan Project.

According to Bush, however, these technological advances were possible “only because we had a large backlog of scientific data accumulated through basic research in many scientific fields in the years before the war.” This idea — that undirected or “basic” science can pay technological dividends — would become central to Bush’s vision for post-war science policy. Just as pre-war research in basic science paved the way for inventions that aided the war effort, so too, Bush believed, could peace-time research in basic science enable future technological advances that would benefit society. This was the reasoning behind Bush’s vision of government-supported basic research — a vision that was realized, albeit only in part, by the creation of the National Science Foundation in 1950, which to this day supports basic research in a variety of scientific fields.

Bush’s ideas were controversial in his day, and remain so in our own. Writing in these pages, Daniel Sarewitz of Arizona State University has gone so far as to label Bush’s claim — that curiosity-driven research bears technological fruit — a “bald-faced but beautiful lie,” calling instead for science to be steered toward technological innovation. But a closer look at the beginnings of Big Science, and Bush’s hand in creating it, will help us to appreciate the long, complex, and often unpredictable paths by which discovery begets invention — a fact that is illustrated strikingly by the iconic technologies associated with the Second World War. If science is to yield technological benefits, history shows that we need a robust enterprise of basic, undirected science, in addition to more practically oriented research and development. Dwindling regard — and shrinking resources — for basic science today may well threaten our chances at moonshots in the future.

There is no question that the large-scale effort of the U.S. government led to the invention of nuclear weaponry — for which reason President Truman, in his speech announcing the use of the atomic bomb in 1945, called the Manhattan Project the “greatest achievement of organized science in history.” Yet, by the time the project was underway, physicists and chemists had been working on nuclear fission for nearly a decade, and many of the underlying scientific discoveries were much, much older, and were not directed toward practical goals.

In 1827, the Scottish botanist Robert Brown pointed his microscope at pollen grains suspended in water and observed tiny particles moving about in apparently random motions. The phenomenon, which came to be called Brownian motion, lacked an adequate explanation for almost eighty years. Then, in 1905, a twenty-six-year-old patent clerk in Switzerland published a paper showing that the behavior of the pollen grains resulted from the motion of invisible molecules. Einstein’s findings, experimentally confirmed by Jean Baptiste Perrin a few years later, offered credible evidence for the existence of molecules and thus the atomic theory of matter — something that had been hotly disputed for at least the previous century.

Also in 1905, what has rightly been called Einstein’s annus mirabilis or “miracle year,” the young physicist published a paper postulating that radiation is made up of individual packets of energy, which he termed “light quanta.” In a letter, Einstein described this conclusion as “very revolutionary,” and so it was, as his account of the photoelectric effect not only lent further support to the corpuscular theory (that light is made up of tiny particles) but also laid the foundation for quantum physics. That was not all. In yet another paper published that year, Einstein outlined his special theory of relativity, which established the equivalence between mass and energy, a finding that would later help scientists explain the release of energy from nuclear fission.

Over a century after Brown made his famous observations, in 1934, a group of scientists working at Enrico Fermi’s Radium Institute in Rome discovered something peculiar about uranium, the heaviest known element. What Fermi and his colleagues observed was that when they bombarded the atomic nuclei of uranium with neutrons, it appeared to create a new, heavier element — a “transuranic element.” In 1939, the German chemists Otto Hahn and Fritz Strassmann published a paper that sent shock waves through the physics community. Through careful observations of neutron bombardment, they came to realize that the phenomenon Fermi and his colleagues had observed was not, in fact, the creation of a heavier, transuranic element but rather the splitting of the uranium nucleus into lighter, radioactive pieces. “We are reluctant to take this step that contradicts all previous experiences of nuclear physics,” the chemists wrote.

The potential significance of these findings soon became clear. As Mary Jo Nye recounts in Before Big Science (1996), Otto Frisch was with his aunt Lise Meitner, both physicists, when she got the news. Frisch recalled how he and his aunt came to appreciate that the “most striking feature” of this “break-up of a uranium nucleus into two almost equal parts” was the “large energy liberated.” Meitner and Frisch would go on to provide a theoretical explanation of what Frisch called “fission,” adopting the term used by microbiologists to describe the division of bacterial cells. Working with American physicist John Wheeler at Princeton University, Niels Bohr determined that the fission of uranium was due to a rare isotope, uranium-235, warning his colleagues that these findings could be used “to make a bomb.” But “it would take the entire efforts of a nation to do it.”

In the months leading up to the outbreak of war, scientists from Paris to Manhattan were working on uranium, and a French team had published results confirming that an astoundingly energetic nuclear chain reaction was a real, if still theoretical, possibility. In light of these developments, Eugene Wigner and the Hungarian physicist Leo Szilard — who had wanted these findings to “be kept secret from the Germans” — urged Einstein to write a letter to President Roosevelt about the military implications of nuclear fission. Einstein’s letter led to the creation of the Advisory Committee on Uranium, which, in 1940, came under the purview of the National Defense Research Committee, a new federal organization led by Vannevar Bush. (The committee operated for a year before it was replaced by Bush’s Office of Scientific Research and Development.)

Initially skeptical of the idea of a nuclear weapons program, Bush was persuaded to take it more seriously by mounting scientific evidence of its feasibility, and he convened the members of the Uranium Committee in Washington, D.C., in late 1941 to explore the idea. In December, Japan bombed Pearl Harbor, and by June 1942, writes Daniel J. Kevles in The Physicists, President Roosevelt “gave Bush the green light for a full-scale effort to build the bomb.”

The scientific discoveries that made possible the destruction of Hiroshima and Nagasaki three years later had taken place in a highly theoretical context, even if the scientists themselves were well aware of the practical implications. Crucially, the nuclear physics that developed in the decade leading up to the war was itself made possible by research in a wide range of domains, from pollen grains to photons, that led to the development of quantum physics in the first half of the century. The Manhattan Project is inconceivable without this rich history of undirected science preceding it.

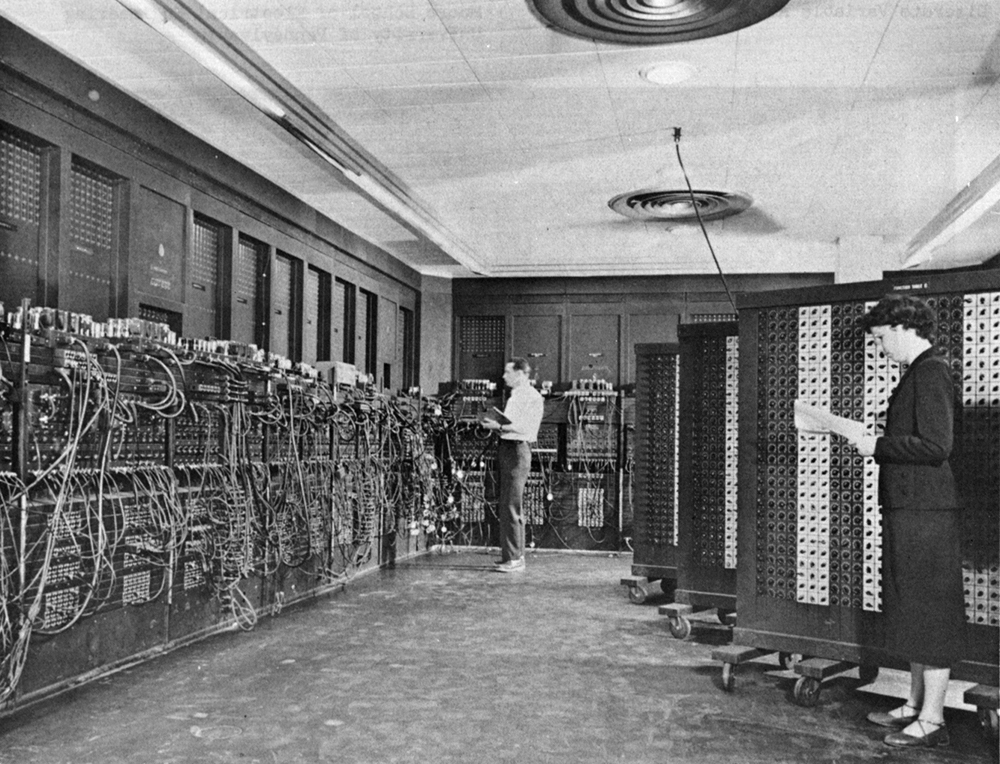

We can see a similarly long, complex process of discovery and invention in the history of another iconic technology of World War II, in which Bush also played a role. Like the bomb, the world’s first electronic computers — England’s Colossus and, shortly afterwards, America’s ENIAC — were born of government projects. But, also like the bomb, the underlying theoretical discoveries that made these inventions possible long predated the war and were not driven by practical goals.

In the 1830s, George Boole was only an adolescent, working as an usher at a boarding school in England, when he began to wonder whether the mathematics of algebra might be used to express the relations of formal logic. As William and Martha Kneale write in The Development of Logic (1962), Boole, who came from a family of modest means and was largely self-taught, would return to this question years later, spurred by a public controversy between two logicians over a technical problem, the “quantification of the predicate.”

In 1847, Boole published a slim volume titled The Mathematical Analysis of Logic, in which he outlined what he called a “Calculus of Logic” — a highly general and philosophically ambitious form of algebra remembered today for laying the foundations of modern “two-valued” or binary logic. When Boole wrote the book, he was not motivated by potential practical applications, taking himself to be describing the “mathematics of the human intellect.” But in 1937, nearly a century after Boole’s book, the American engineer Claude Shannon proved that Boole’s algebra could be applied to electrical relays and switching circuits — the concept that lies at the heart of modern digital computing.

The path from Boole’s discovery to Shannon’s insight was not linear. Indeed, Shannon was not the first to notice the practical implications of mathematical logic for computing. Experiments with calculating machines go back at least to the seventeenth century: Gottfried Wilhelm Leibniz, who had intuited the symmetry between algebra and logic later demonstrated by Boole, invented a machine capable of performing basic arithmetic operations. And in 1869, on the basis of Boole’s methods, the English logician William Stanley Jevons built a “logic piano,” a calculating machine resembling a small upright piano that to us “looks more like a cash register,” as the Kneales write. When Shannon published his own results, he was a graduate student at M.I.T., working on an analog mechanical computer called a differential analyzer, which had been invented a few years earlier by none other than Vannevar Bush.

Shannon’s own journey, too, is rich in serendipity. He was born in 1916 in a small Michigan town in the era of barbed-wire telegraphs — he once constructed a half-mile connection to a friend’s house. He studied electrical engineering and mathematics at the University of Michigan, where, just before graduating in 1936, he saw a posting for a position as research assistant at M.I.T. In one of those portentous coincidences of history, the job was to operate and maintain Bush’s differential analyzer — which had already earned the popular moniker “thinking machine.” As James Gleick chronicles in The Information (2011), it was while working on the differential analyzer, a hundred-ton electromechanical machine full of electrical relays, that Shannon realized that the state of each relay, “on” or “off,” could be represented mathematically using a binary algebra of zeros and ones. Bush encouraged Shannon’s research, urging him to specialize in mathematics rather than the more popular fields of electric motor drive and power transmission. The twenty-one-year-old Shannon would go on to write his master’s thesis on the electromechanical applications of Boolean algebra, now considered the foundation of digital circuit design.
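Shannon’s observation can be made concrete with a small sketch. The Python below is an illustration only, not a reconstruction of his thesis. It assumes the modern convention that 1 stands for a closed relay (current flows) and 0 for an open one, and the function names `series`, `parallel`, `inverted`, and `half_adder` are hypothetical. Under that assumption, two relays wired in series behave like the Boolean AND, two wired in parallel behave like OR, and a handful of such circuits is enough to add binary digits.

```python
from itertools import product


def series(a: int, b: int) -> int:
    """Two relays in series conduct only if both are closed (Boolean AND)."""
    return a & b


def parallel(a: int, b: int) -> int:
    """Two relays in parallel conduct if either one is closed (Boolean OR)."""
    return a | b


def inverted(a: int) -> int:
    """A normally-closed contact conducts when its relay is not energized (NOT)."""
    return 1 - a


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two binary digits using only the relay circuits above.

    Returns (sum_bit, carry_bit).
    """
    # The sum bit is 1 when exactly one input is 1: an exclusive OR
    # assembled from series, parallel, and inverted contacts.
    sum_bit = parallel(series(a, inverted(b)), series(inverted(a), b))
    # The carry bit is 1 only when both inputs are 1.
    carry_bit = series(a, b)
    return sum_bit, carry_bit


if __name__ == "__main__":
    for a, b in product((0, 1), repeat=2):
        s, c = half_adder(a, b)
        print(f"{a} + {b} -> carry {c}, sum {s}")
```

The point of the sketch is only that the wiring of on-off switches and the algebra of zeros and ones describe the same thing, which is why a logic devised for the “mathematics of the human intellect” could later serve circuit design.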

After receiving his doctorate from M.I.T. in 1940, Shannon joined the Institute for Advanced Study in Princeton, New Jersey, then the home of Albert Einstein and some of the greatest living mathematicians, such as Hermann Weyl, John von Neumann, and Kurt Gödel. But in the summer of 1941, with American entry into the Second World War seeming imminent, Shannon went to work for Bell Telephone Laboratories to help contribute to the war effort. It was there that he met and occasionally discussed ideas with computing pioneer Alan Turing, who had been sent from England to the United States for a two-month stint in 1943 to consult with the U.S. military on cryptography. Besides cryptography, Shannon spent his time at Bell Labs working on applying communications theory to antiaircraft technology using an electromechanical computer — research supported by a major contract from Bush’s National Defense Research Committee.

In the post-war years, Shannon, like Bush, his mentor and former professor, would become one of America’s most prominent scientific celebrities — lauded, in particular, for his pioneering work in digital computing and information theory. But despite federal dollars and direction, his success is also a result of accidents of history and of the undirected, pioneering work of those who prepared his path.

The story of radar further illustrates how basic research can pay practical dividends years after foundational discoveries have been made. But it also illustrates the crucial role that curiosity and serendipity can play even in the context of applied, directed research — challenging the idea that science can simply be steered toward predetermined practical objectives.

The strategic value of radio communications had already been clear in the waning days of the First World War. But the scientific discoveries that made radio technology possible date back as far as the 1830s, when Michael Faraday demonstrated that an electrical current may be induced using a changing magnetic field. This offered experimental evidence that electricity and magnetism were not separate forces, as had long been believed, but instead interrelated phenomena. In 1864, James Clerk Maxwell produced a mathematical model for electromagnetism using a system of partial differential equations that would culminate in his famous two-volume A Treatise on Electricity and Magnetism of 1873. According to Maxwell’s theory, electricity and magnetism were not forces but fields propagated in a wave-like manner.

The concept of electromagnetic waves was controversial, and alternative theories sprang up, especially on the Continent. The most prominent among them was associated with the famous German scientist Hermann von Helmholtz. Helmholtz, whose laboratory attracted students from across the globe, had developed his own electrodynamic equations and postulated the existence of “atoms of electricity.” One of his students was Heinrich Hertz, who, much to his own surprise, experimentally confirmed the existence of electromagnetic waves. Helmholtz called the finding “the most important physical discovery of the century.” Before long, Guglielmo Marconi was able to demonstrate that Hertzian waves — or radio waves, as they came to be called — could be transmitted across long distances. By the 1920s — thanks also to the invention and refinement of the electron (or vacuum) tube — radio had become a popular form of mass communication.

In 1935, some forty years after Hertz’s confirmation of Maxwell’s theory, the British Air Ministry was eager to find still other uses for radio technology. It announced a contest with a prize of a thousand pounds for developing a “death ray” capable of killing a sheep at one hundred yards away. Officials at the Ministry sought advice on the practicality of such a weapon from Robert Watson-Watt (a descendant of inventor James Watt), who was an engineer and superintendent of Britain’s Radio Department of the National Physical Laboratory.

Watson-Watt, who had been studying radio for years, was skeptical of the idea, but tasked Arnold Wilkins, a young officer who worked for him, with calculating the amount of radio energy needed to raise the temperature of eight pints of water (roughly the volume of blood in a human body) from 98 to 105 degrees Fahrenheit at a distance of one kilometer. Wilkins quickly found that such a feat was not remotely possible with existing technology. He offered Watson-Watt another idea, however: that a powerful radio transmitter could be used to bounce radio waves off aircraft miles away, allowing one to determine their precise location and track their movements. As Robert Buderi recounts the story in The Invention that Changed the World (1996), this idea had come to Wilkins while he was visiting, of all places, the post office, where he happened to hear postal workers complaining that their radio transmissions were disturbed by passing aircraft.
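The scale of the task Wilkins was handed can be seen with a rough back-of-the-envelope sketch. The Python below is not his actual calculation, which the account above does not reproduce. It assumes imperial pints (about 0.568 liters each), treats blood as water with a specific heat of about 4,186 joules per kilogram per kelvin, and computes only the heat the target would have to absorb. The constant and function names are hypothetical.

```python
LITERS_PER_IMPERIAL_PINT = 0.568261  # assumption: imperial, not U.S., pints
WATER_KG_PER_LITER = 1.0             # assumption: treat blood as water
WATER_SPECIFIC_HEAT = 4186.0         # joules per kilogram per kelvin (approximate)


def fahrenheit_delta_to_kelvin(delta_f: float) -> float:
    """Convert a temperature difference in Fahrenheit degrees to kelvins."""
    return delta_f * 5.0 / 9.0


def heat_required_joules(pints: float, start_f: float, end_f: float) -> float:
    """Heat needed to warm the given volume of water, using Q = m * c * dT."""
    mass_kg = pints * LITERS_PER_IMPERIAL_PINT * WATER_KG_PER_LITER
    delta_k = fahrenheit_delta_to_kelvin(end_f - start_f)
    return mass_kg * WATER_SPECIFIC_HEAT * delta_k


if __name__ == "__main__":
    joules = heat_required_joules(pints=8, start_f=98, end_f=105)
    print(f"Heat absorbed by the target: about {joules / 1000:.0f} kilojoules")
```

Under these assumptions the figure comes to roughly 74 kilojoules. The sketch says nothing about the transmitter power needed to deliver that heat to a body a kilometer away, which is the part Wilkins found to be out of reach.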

Watson-Watt realized that Wilkins’s insight could be combined with his own pioneering research on locating thunderstorms with the use of a rotating directional antenna linked to an oscilloscope to display the antenna’s output. He sent a memo about Wilkins’s idea to his superior, who was heading up the British government’s efforts to find new techniques to defend against enemy aircraft. Britain’s war department was at the time exploring every avenue for early aircraft detection, from balloon barrages and search lights to gigantic gramophone-style horns coupled with stethoscopes to listen for engines. Watson-Watt’s 1935 memo “Detection of Aircraft by Radio Methods” ignited a firestorm of activity and government funding. By the time war broke out, Great Britain’s coast was protected by an increasingly intricate network of radar stations, which the British called “Chain Home.”

One particularly thorny technical challenge that radar scientists were facing was how to improve precision. At the time, radio amplifiers could produce high power levels only at long wavelengths, yielding locational accuracy that could be off by miles. The solution came in 1939, from two physicists at the University of Birmingham who invented a device called a cavity magnetron that was portable and could produce high-power microwaves. Although dozens of industrial research laboratories had begun the quest for a shorter-wavelength emitter back in the 1920s, all were focused on communications applications on the heels of the then-explosive growth in the radio business. As one history of the magnetron puts it, “Like many other disruptive breakthroughs, the cavity magnetron was the result of a number of related explorations, in technology, in experiment, and in theory.”

The British magnetron was kept secret until 1940, when Henry Tizard, head of the Aeronautical Research Committee, led a delegation to collaborate on military research with the United States, where a similar device had been invented independently. (Other, unsuccessful prototypes were also invented in Japan, Germany, and Russia.) But the British version achieved nearly a thousand times greater output and could be manufactured at scale.

With funding from Vannevar Bush’s National Defense Research Committee, the Radiation Laboratory was launched at M.I.T. to help develop the technology into an airborne system that Britain would be able to deploy as soon as possible. The U.S. government would go on to spend $1.5 billion to develop radar technologies at M.I.T. — three-quarters as much as it spent on the Manhattan Project. By the end of the war, the “Rad Lab” directly employed almost four thousand people and had invented a wide range of radar systems that proved pivotal to the war effort, aiding with aircraft navigation, radar countermeasures, strategic bombing, and accurate detection of aircraft and — critically — of submarines.

Radar arguably stimulated far more technologies that were immediately practical in the post-war period than did any other invention of the war, entering commercial markets at a furious pace, including in civilian aviation and marine navigation. Radar research also enabled a vast array of subsequent inventions, from cable-free high-bandwidth microwave communications — used to this day for long-distance information transport — to semiconductors that led directly to the transistor.

As with the bomb and the computer, government directives and funding played crucial roles in the development of radar. It is significant, however, that even such directed research did not always follow its predetermined objective. In the case of the death-ray challenge, that turned out to be a good thing. Moreover, neither radar nor the many other inventions it spawned, during and after the war, would have been possible without the scientific discoveries made long before by Faraday, Maxwell, Hertz, and others.

World War II saw unprecedented levels of government support for scientific research, with federal dollars making up over 80 percent of all spending on research and development in America by war’s end. Vannevar Bush, however, was worried that after the war the government’s generosity would decline. At the same time, he worried that whatever federal support would continue during peacetime would also remain controlled by government, directed toward practical application. Although Bush knew firsthand that government control was critical for producing many of the technologies that helped secure Allied victory, he also knew firsthand that such technological success was possible thanks in no small part to discoveries in “basic science” made before the war.

Bush explained his reasoning in his July 1945 report to President Truman, Science, the Endless Frontier, perhaps the most famous science policy document ever written. As he pointed out, “most of the war research has involved the application of existing scientific knowledge to the problems of war.” But, he warned,

we must proceed with caution in carrying over the methods which work in wartime to the very different conditions of peace. We must remove the rigid controls which we have had to impose, and recover freedom of inquiry and that healthy competitive scientific spirit so necessary for expansion of the frontiers of scientific knowledge.

Wartime research and development was successful in part because of the “scientific capital” accrued prior to the war by scientists who were free from obligations to pursue practical applications. Now that the war was over, he argued, what was needed was more knowledge — more basic science — not just more applied research and development. Basic science

creates the fund from which the practical applications of knowledge must be drawn. New products and new processes do not appear full-grown. They are founded on new principles and new conceptions, which in turn are painstakingly developed by research in the purest realms of science.

Today, it is truer than ever that basic research is the pacemaker of technological progress.

The argument was controversial, and Bush, a Republican, didn’t fully get his way, as Democrats called for more, not less, government control of science. They saw more government control as a solution to another problem: the growing interdependence of science and industry. Industrial research had exploded during the interwar period, “only slightly inhibited” by the Great Depression, as historian Kendall Birr observed. From 1927 to 1938, the number of industrial research laboratories in the United States grew by about three-quarters, from 1,000 to 1,769, while the number of their employees more than doubled, from 19,000 to 44,000. The companies funding this work, such as AT&T, were suspected of using it to solidify their market advantage, thus increasing economic concentration at the expense of the consumer.

At the beginning of the war, industrial research was already highly concentrated in a minority of corporate laboratories. By the end of the war, observes Daniel Kevles, the concentration had become even more pronounced, with 66 percent of federal investment in research and development going to sixty-eight companies, and 40 percent to just ten. According to Senator Harley M. Kilgore, a die-hard New Dealer, science was becoming a mere “handmaiden for corporate or industrial research.”

The accusation proved politically powerful. On the Senate floor in 1943, Thurman Arnold, a federal judge and former assistant attorney general for the Department of Justice’s Antitrust Division, called on the government to “break the corner on research and experimentation now enjoyed by private groups.” The science editor of the New York Times wrote that “laissez-faire has been abandoned as an economic principle; it should also be abandoned, at least as a matter of government policy, in science.” At the end of 1945, Senator Kilgore was calling for the creation of a National Science Foundation to oversee and direct all federal research to socially desirable ends.

Although Bush was no fan of the New Deal, he shared Kilgore’s worries about economic concentration and industry control of science. But he balked at the contention that scientific research should instead be controlled by the government. At issue, he wrote to presidential adviser Bernard Baruch, was “whether science in this country is going to be supported or whether it is also going to be controlled.” It needed support, in other words, but control, whether by industry or government, risked stifling the discovery of new knowledge. As Bush argued in Science, the Endless Frontier, “industry is generally inhibited by preconceived goals” and by the “constant pressure of commercial necessity.” For similar reasons, science should not be controlled by government either. Though subject to political rather than commercial pressures, government was, like industry, concerned with the “application of existing scientific knowledge to practical problems,” rather than with “expanding the frontiers of scientific knowledge.”

Whereas Senator Kilgore’s proposed National Science Foundation would direct federal research for particular applications, Bush proposed an alternative: a National Research Foundation that would instead disburse funds for basic research. He laid out his plan in Science, the Endless Frontier. According to his telling, the origin of this report was a conversation with President Roosevelt, during which Bush expressed concern about the fate of federal science after the war. In truth, he had suggested the idea to the president precisely to counter Kilgore’s program. Roosevelt formally requested the report in 1944. In 1945, it was submitted to President Truman and released to the public, only a few days before the release of Kilgore’s plan.

In Bush’s plan, the foundation would not itself direct or oversee any research, but would instead fund a diverse array of non-governmental institutions, “principally the colleges, universities, and research institutes.” As long as these “centers of basic research” were “vigorous and healthy,” Bush argued, “there will be a flow of new scientific knowledge to those who can apply it to practical problems in Government, in industry, or elsewhere.” In contrast to Kilgore’s plan, “internal control of policy, personnel, and the method and scope of research” would be left “to the institutions in which it is carried on.” For Bush, it is when science is free, rather than controlled — whether by government or industry — that it is most likely to flourish, ultimately yielding surprising insights that might one day prove useful.

The political fight over the creation of a new federal science agency continued for several years. In 1950, President Truman finally signed a bill creating the National Science Foundation. Despite using Kilgore’s suggested name, the new agency reflected Bush’s vision in some important respects, including his emphasis on basic research. But there were at least two significant caveats. The director would be appointed by the president, as Kilgore demanded, in contrast to Bush’s plan to have the director elected by the foundation’s members — Truman had vetoed an earlier bill that excluded this provision. And the foundation would have power to set the overall research agenda, rather than deferring entirely to the research institutions supported by the foundation.

In its design, the National Science Foundation clearly bore the imprint both of Bush’s support for applied research during the war and of his post-war paeans to basic science. But his broader vision for federal support of undirected research was never fully realized. With the growth of national laboratories and the rise of Cold War research, the foundation’s role became a shadow of what Bush had imagined. As he explained, he had wanted an “over-all agency” that would act as a “focal point within the Government for a concerted program of assisting scientific research conducted outside of Government.”

In fact, however, the NSF did not become the government’s principal sponsor of research — nor even of the majority of federally funded research. In the immediate post-war period, the NSF budget was quickly dwarfed by the budgets of the new Office of Naval Research, which had reneged on a pledge to transfer basic research over to the NSF, and by the Atomic Energy Commission, which conducted its own basic research in the expanding field of nuclear physics. Even medical research that during the war had been under the purview of Bush’s Office of Scientific Research and Development was moved into the already existing National Institutes of Health. So by the time of its creation, the NSF was, as Kevles puts it, “only a puny partner in an institutionally pluralist federal research establishment.”

Today, seventy-five years after Vannevar Bush’s report to the president, support for basic science makes up a declining share of overall U.S. government research spending, the vast majority of which is goal-directed. Furthermore, roughly two-thirds of all funding for science in the United States comes from industry rather than government, the reverse of the ratio that held a decade or so after World War II. This means that both public and private dollars increasingly support practical and directed, rather than basic or undirected, “curiosity-driven,” research.

As in Bush’s day, there are many today who believe, quite understandably, that “you’ve got to deliver a benefit to society that’s commensurate with that [research] investment,” as Thom Mason, director of Oak Ridge National Laboratory, has put it. And Daniel Sarewitz has argued that Bush’s idea that curiosity-driven research bears technological fruit is a “beautiful lie” — a lie that has not benefited society but instead created science that is increasingly useless and even unreliable. Although Sarewitz concedes that science has been important for technological development, he argues that “the miracles of modernity,” including digital computing and nuclear power, “came not from ‘the free play of free intellects,’ but from the leashing of scientific creativity to the technological needs of the U.S. Department of Defense.”

The central issue now is essentially the same as it was in the time of Bush: Should federal science be directed toward specific, practical objectives or free to pursue its own ends? As we’ve seen, the history of some of the most iconic inventions of World War II (including, indeed, digital computing and nuclear power) may not settle the question for all time, but it does lend important support to Bush’s vision. These extraordinary technological feats were possible in part because of “a large backlog of scientific data accumulated through basic research.”

It’s worth noting that Bush, though he was the most vigorous cheerleader for basic science, was convinced of its importance not on theoretical or historical grounds, but because of his personal experience. “I’m no scientist, I’m an engineer,” he liked to say. As a young man, he had excelled in mathematics but preferred the more practical path of invention, receiving a joint doctorate in engineering from M.I.T. and Harvard in 1916. It was his experience as an electrical engineer that seems to have convinced him of the value of basic research: During the First World War, he joined an effort to develop a device for detecting submarines — an experience, he later said, that “forced into my mind pretty solidly the complete lack of proper liaison between the military and the civilian in the development of weapons in time of war, and what that lack meant.” As founder and director of the Office of Scientific Research and Development during World War II, Bush would have a chance to test his own solution to this problem.

After the First World War, he was appointed professor of power transmission at M.I.T.’s department of electrical engineering and later became dean of engineering and vice president of the university. During that time, Bush also helped his friend and college roommate Laurence K. Marshall launch a business venture focusing on refrigeration and electronics technology, the Raytheon Corporation, which would later become a large defense contractor.

Bush’s career reflects the historical reality that by the early twentieth century, science and technology had become interdependent and mutually reinforcing enterprises. As a researcher in fields that spanned mathematics, computing, and engineering, an entrepreneur with multiple patentable inventions to his name, and a federal bureaucrat who led a wartime agency that oversaw the development of advanced computing techniques, radar, and the bomb, Bush was living proof of this reality. But it does not follow from this historical fact that science and technology therefore constitute a uniform enterprise, one that can simply be “directed” toward whatever practical objectives we wish. What Bush seems to have intuited, and what history seems to validate, is rather that scientific knowledge had become indispensable for modern technological advance — and specifically that theoretical discoveries could, over time, generate enormous practical benefits. This central claim of Bush’s is powerfully illustrated by the application of electromagnetism, quantum and nuclear physics, and mathematical logic in the wartime research on radar, nuclear energy, and computing supported by the very federal agency he led.

With federal support for basic science shrinking relative to applied research and development, it may now be time to reconsider the principles governing our national science policy. As we do, we would do well to heed history’s lesson that innovation in the public interest often occurs because of research done without interest — that technological marvels may need a scientific enterprise free to pursue its own aims, and amply funded to do so.
