Thanks for the shoutout and the kind words, Adam, about my review of Kurzweil’s latest book. I’ll take a stab at answering the question you posed:

> I wonder how far Ari and [Edward] Feser would be willing to concede that the AI project might get someday, notwithstanding the faulty theoretical arguments sometimes made on its behalf…. Set aside questions of consciousness and internal states; how good will these machines get at mimicking consciousness, intelligence, humanness?

Allow me to come at this question by looking instead at the big-picture view you explicitly asked me to avoid. And forgive me, readers, for approaching this rather informally. What follows is in some sense a brief update on my thinking on questions I first explored in my long 2009 essay on AI.

The big question can be put this way: Can the mind be replicated, at least to a degree that will satisfy any reasonable person that we have mastered the principles that make it work and can control the same? A comparison AI proponents often bring up is that we’ve recreated flying without replicating the bird — and in the process figured out how to do it much faster than birds. This point is useful for focusing AI discussions on the practical. But unlike many of those who make this comparison, I think most educated folk would recognize that the large majority of what makes the mind the mind has yet to be mastered and magnified in the way that flying has, even if many of its defining functions have been.

So, can all of the mind’s functions be recreated in a controllable way? I’ve long felt the answer must be yes, at least in theory. The reason is that, whatever the mind itself is — regardless of whether it is entirely physical — it seems certain to at least have entirely physical causes. (Even if these physical causes might result in non-physical causes, like free will.) Therefore, those original physical causes ought to be subject to physical understanding, manipulation, and recreation of a sort, just as with birds and flying.

The prospect of many mental tasks being automated on a computer should be unsurprising, and to an extent not even unsettling to a “folk psychological” view of free will and first-person awareness. I say this because one of the great powers of consciousness is to make habits of its own patterns of thought, to the point that they can be performed with minimal to no conscious awareness; not only tasks, skills, and knowledge, but even emotions, intuitive reasoning, and perception can be understood to some extent as products of habitualized consciousness. So it shouldn’t be surprising that we can make explicit again some of those specific habits of mind, even ones like perception that seem prior to consciousness, in a way that’s amenable to proceduralization.

The question is how many of the things our mind does can be tackled in this way. In a sense, many of the feats of AI have been continuing the trend established by mechanization long before — of having machines take over human tasks but in a machinelike way, without necessarily understanding or mastering the way humans do things. One could make a case, as Mark Halpern has in The New Atlantis, that the intelligence we seem to see in many of AI’s showiest successes — driverless cars, supercomputers winning chess and Jeopardy! — may be better understood as belonging to the human programmers than the computers themselves. If that’s true, then artificial intelligence thus far would have to be considered more a matter of advances in (human) artifice than in (computer) intelligence.

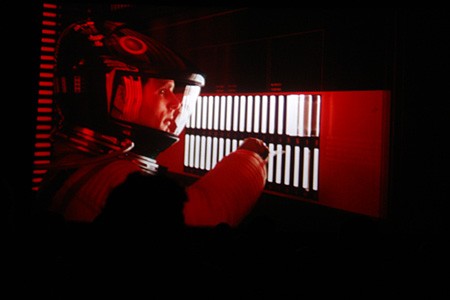

It will be curious to see how much further those methods can go without AI researchers having to return to attempting to understand human intelligence on its own terms. In that sense, perhaps the biggest, most elusive goal for AI is to create (whether by replicating consciousness or not) a generalized artificial intelligence: not the big accretion of specifically tailored programs we have now, but a program that, like our mind, is able to tackle just about any and every problem that is put before it, only far better than we can. (That’s setting aside the question of how we could control such a powerful entity to suit our preferred ends, which, despite what the Friendly AI folks say, sounds like a contradiction in terms.)

So, to Adam’s original question: “practically speaking … how good will these machines get at mimicking consciousness, intelligence, humanness?” I just don’t know, and I don’t think anyone can intelligently say that they do. I do know that almost all of the prominent AI predictions have turned out to be grossly optimistic in their time scale, but, as Kurzweil rightly points out, a large number of feats that once seemed impossible have been conquered. Who’s to say how much further that line will progress, or how many functions of the mind will be recreated before some limit is reached, if one is reached at all? One has to approach and criticize particular AI techniques; it’s much harder to competently engage in generalized speculation about what AI might someday be able to achieve or not.

So let me engage in some more of that speculation. My view is that the functions of the mind that require the most active intervention of consciousness to carry out — the ones that are the least amenable to habituation — will be among the last to fall to AI, if they do at all (although basic acts of perception remain famously difficult as well). The most obvious examples are highly creative acts and deeply engaged conversation. These have been imitated by AI, but poorly.

Many philosophers of mind have tried to put this the other way around by devising thought experiments about programs that completely imitate, say, natural language recognition, and then arguing that such a program could appear conscious without actually being so. Searle’s Chinese Room is the most famous among many such arguments. But Searle et al. seem to put an awful lot into that assumption: can we really imagine how it would be possible to replicate something like open-ended conversation (to pick a harder example) without also replicating consciousness? And if we could replicate much or all of the functionality of the mind without its first-person experience and free will, then wouldn’t that actually end up all but evacuating our view of consciousness? Whatever you make of the validity of Searle’s argument, contrary to the claims of Kurzweil and Searle’s other critics, the Chinese Room is a remarkably tepid defense of consciousness.

This is the really big outstanding question about consciousness and AI, as I see it. The idea that our first-person experiences are illusory, or are real but play no causal role in our behavior, so deeply defies intuition that it seems to require an extreme degree of proof which hasn’t yet been met. But the causal closure of the physical world seems to demand an equally high burden of proof to overturn.

If you accept compatibilism, this isn’t a problem — and many philosophers do these days, including our own Ray Tallis. But for the sake of not letting this post get any longer, I’ll just say that I have yet to see any satisfying case for compatibilism that doesn’t amount to making our actions determined by physics but telling us don’t worry, it’s what you wanted anyway.

I remain of the position that one or the other of free will and the causal closure of the physical world will have to give; but I’m agnostic as to which it will be. If we do end up creating the AI-managed utopia that frees us from our present toiling material condition, that liberation may have to come at the minorly ironic expense of discovering that we are actually enslaved.